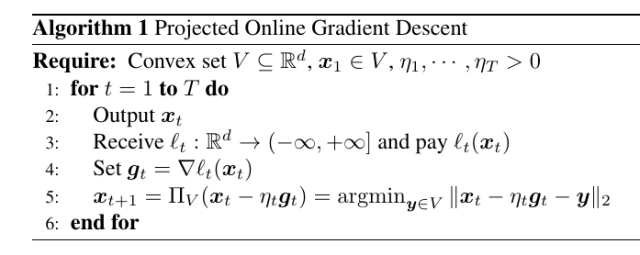

Online Gradient Descent – Parameter-free Learning and Optimization Algorithms

By a mysterious writer

Last updated 17 May 2024

This post is part of the lecture notes for my class "Introduction to Online Learning" at Boston University, Fall 2019. I will publish two lectures per week. You can find the lectures published so far here. To summarize the previous note, let's define online learning as the following general game…

Almost Sure Convergence of SGD on Smooth Non-Convex Functions

Stochastic Gradient Descent Algorithm With Python and NumPy – Real

An Introduction To Gradient Descent and Backpropagation In Machine

Stochastic gradient descent for hybrid quantum-classical

Yet Another ICML Award Fiasco – Parameter-free Learning and

Adam is an effective gradient descent algorithm for ODEs. a Using

Gentle Introduction to the Adam Optimization Algorithm for Deep

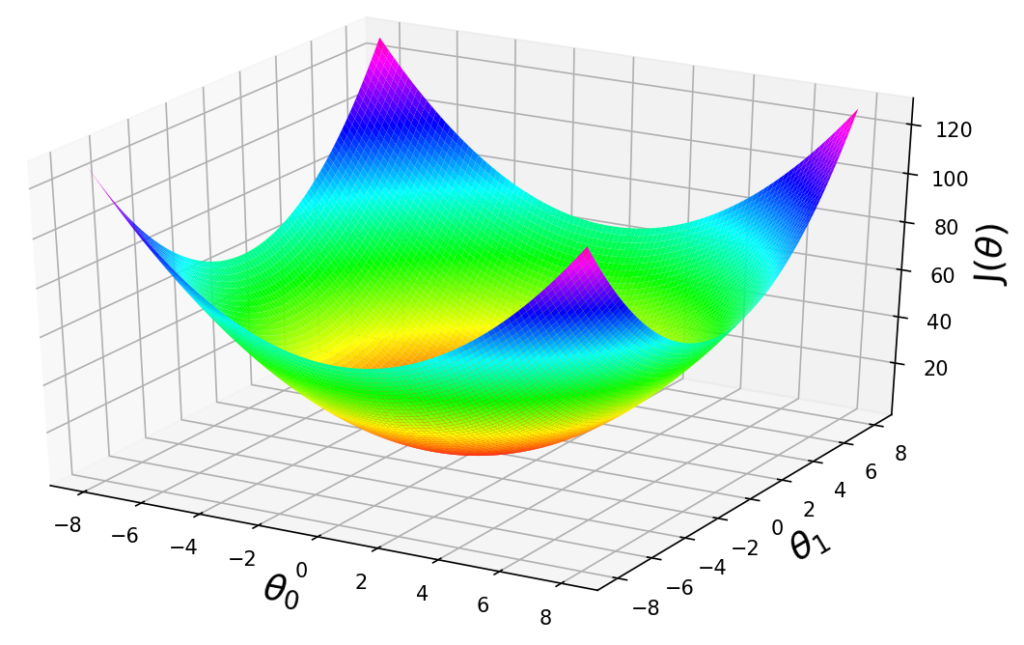

Gradient Descent Algorithm in Machine Learning

Francesco Orabona on LinkedIn: Adapting to Smoothness with

Recommended for you

Gradient descent - Wikipedia

Solved (b) Consider the nonlinear system of equations z +

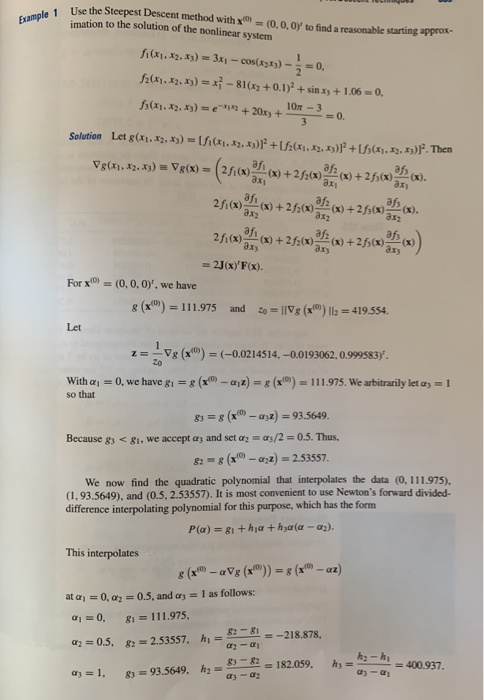

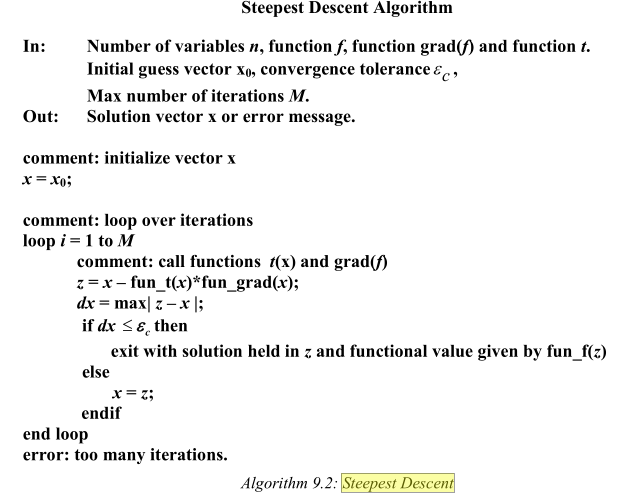

Write a MATLAB program for the steepest descent

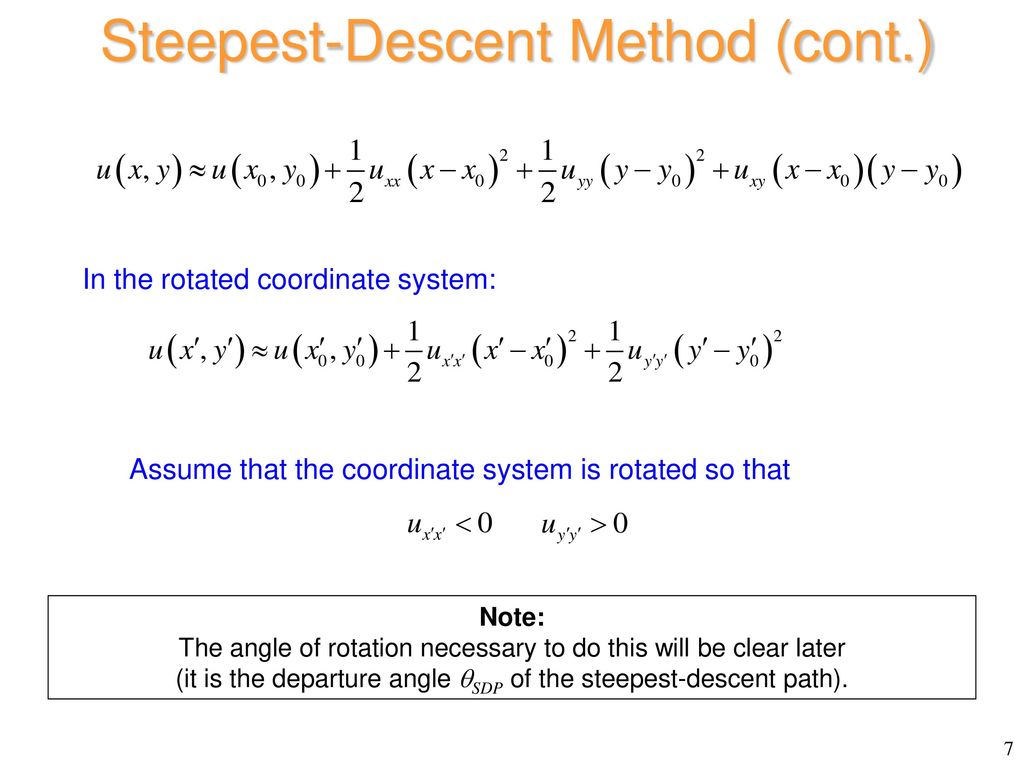

The Steepest-Descent Method - ppt download

optimization - How to show that the method of steepest descent does not converge in a finite number of steps? - Mathematics Stack Exchange

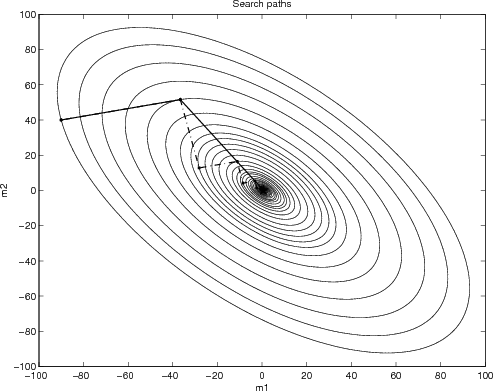

Why steepest descent is so slow

Guide to Gradient Descent Algorithm: A Comprehensive implementation in Python - Machine Learning Space

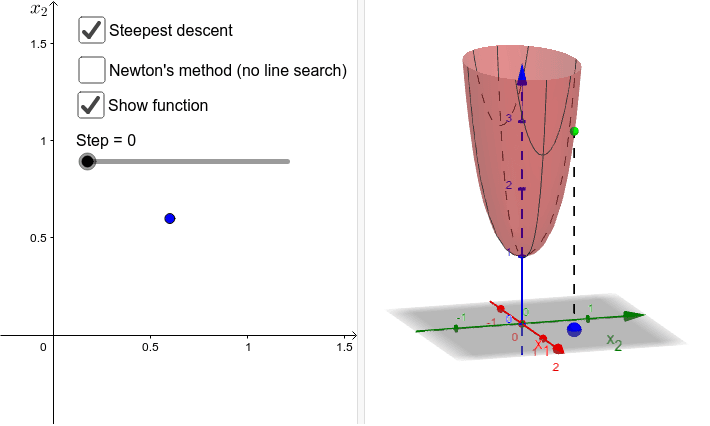

Steepest descent vs gradient method – GeoGebra

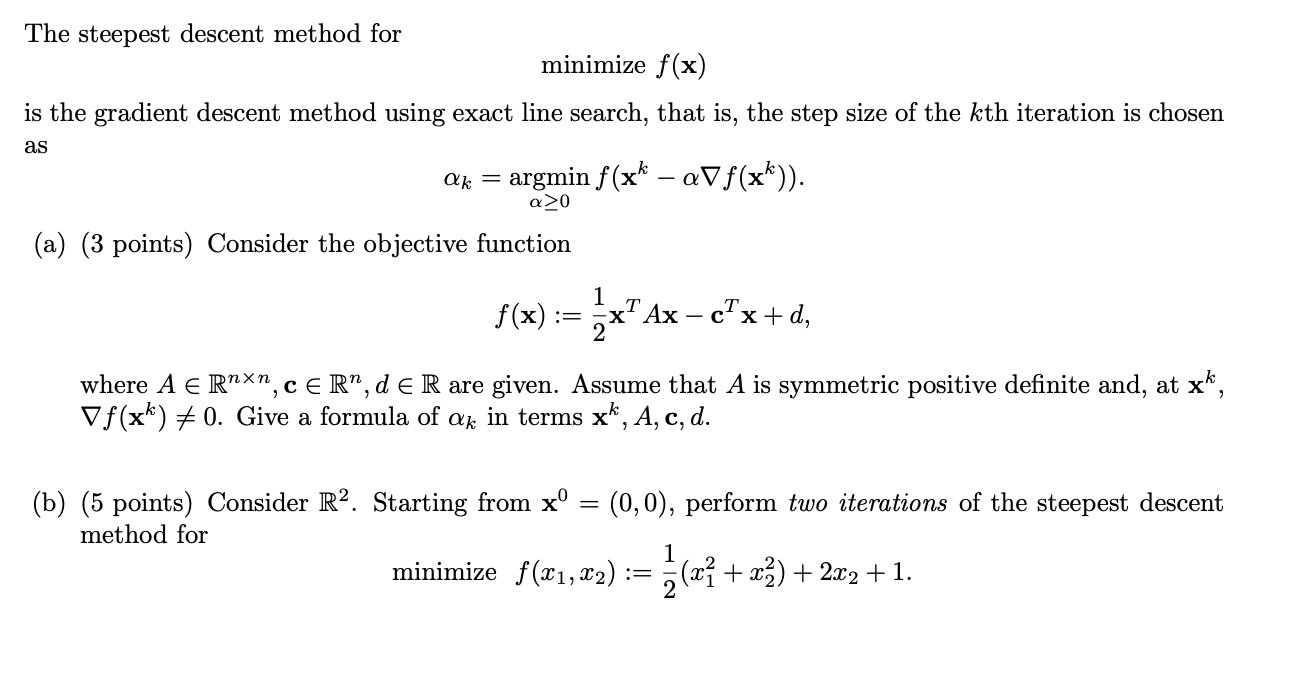

Solved The steepest descent method for minimize f(x) is the

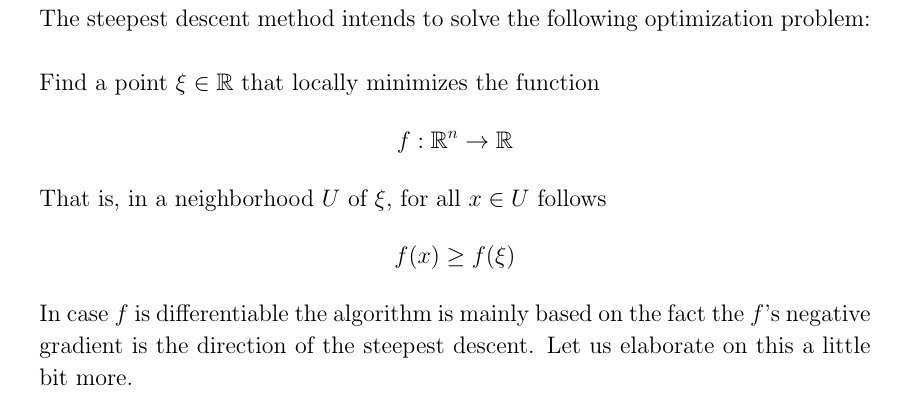

The Steepest Descent Algorithm. With an implementation in Rust., by applied.math.coding
