Visualizing the gradient descent method

By a mysterious writer

Last updated 17 May 2024

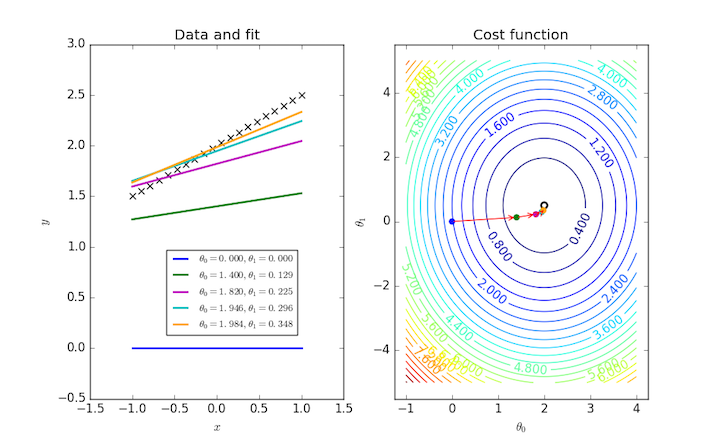

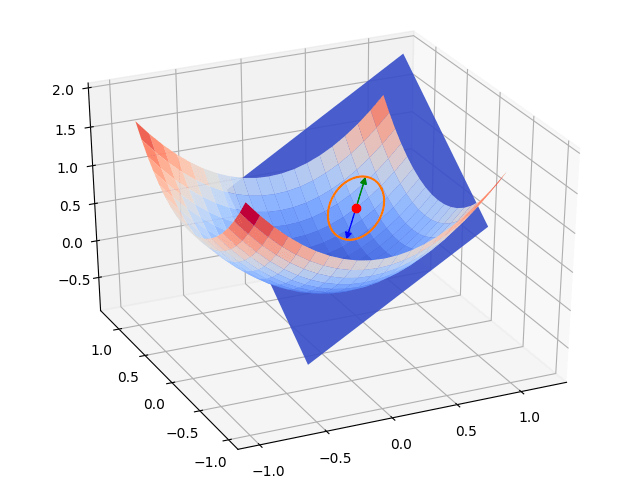

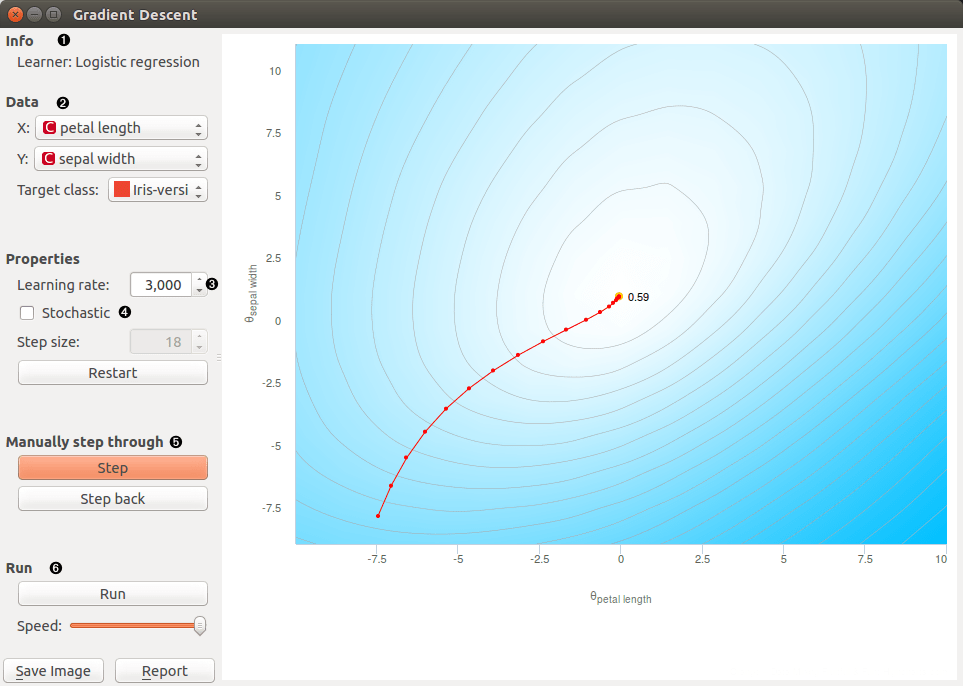

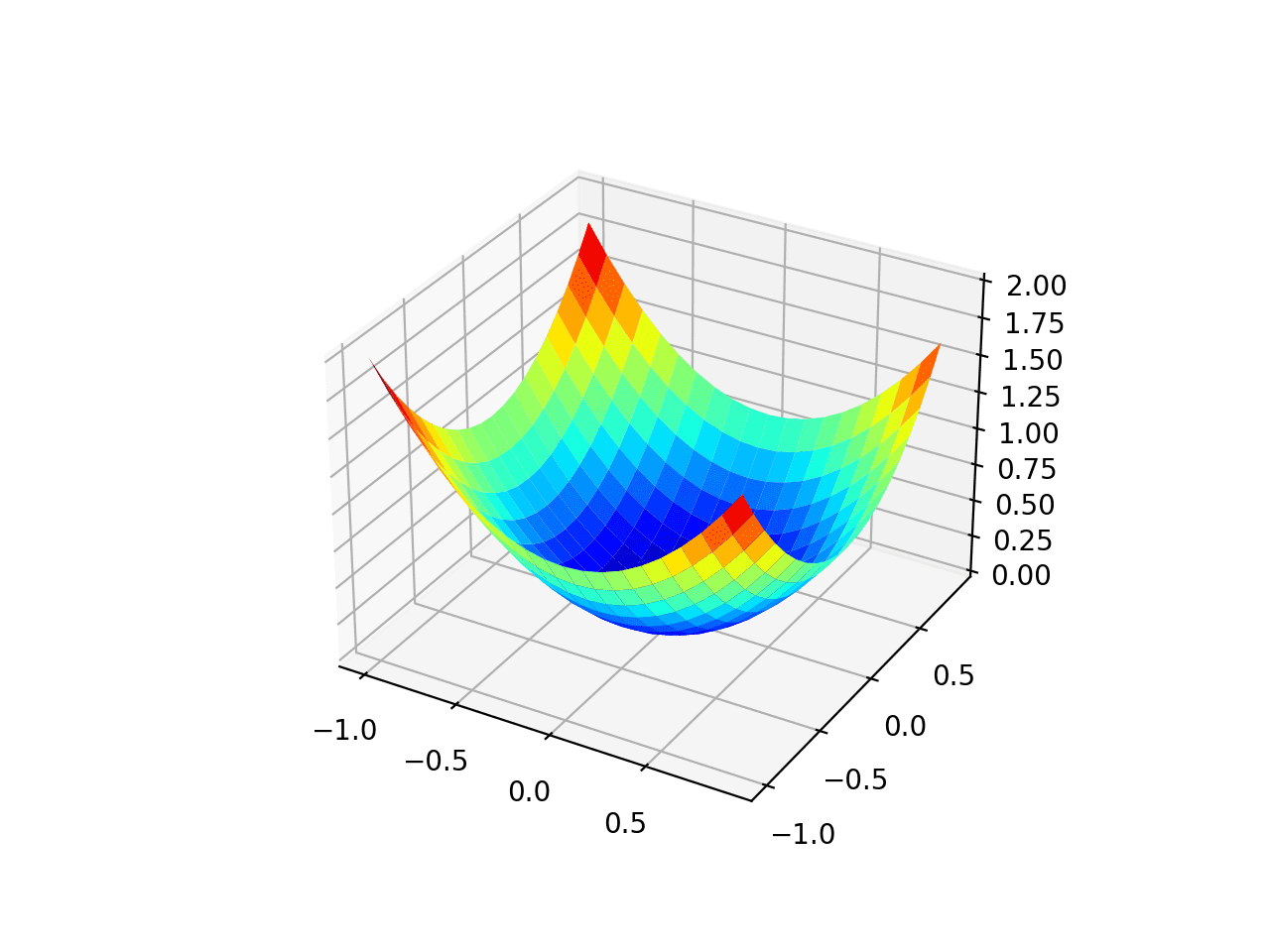

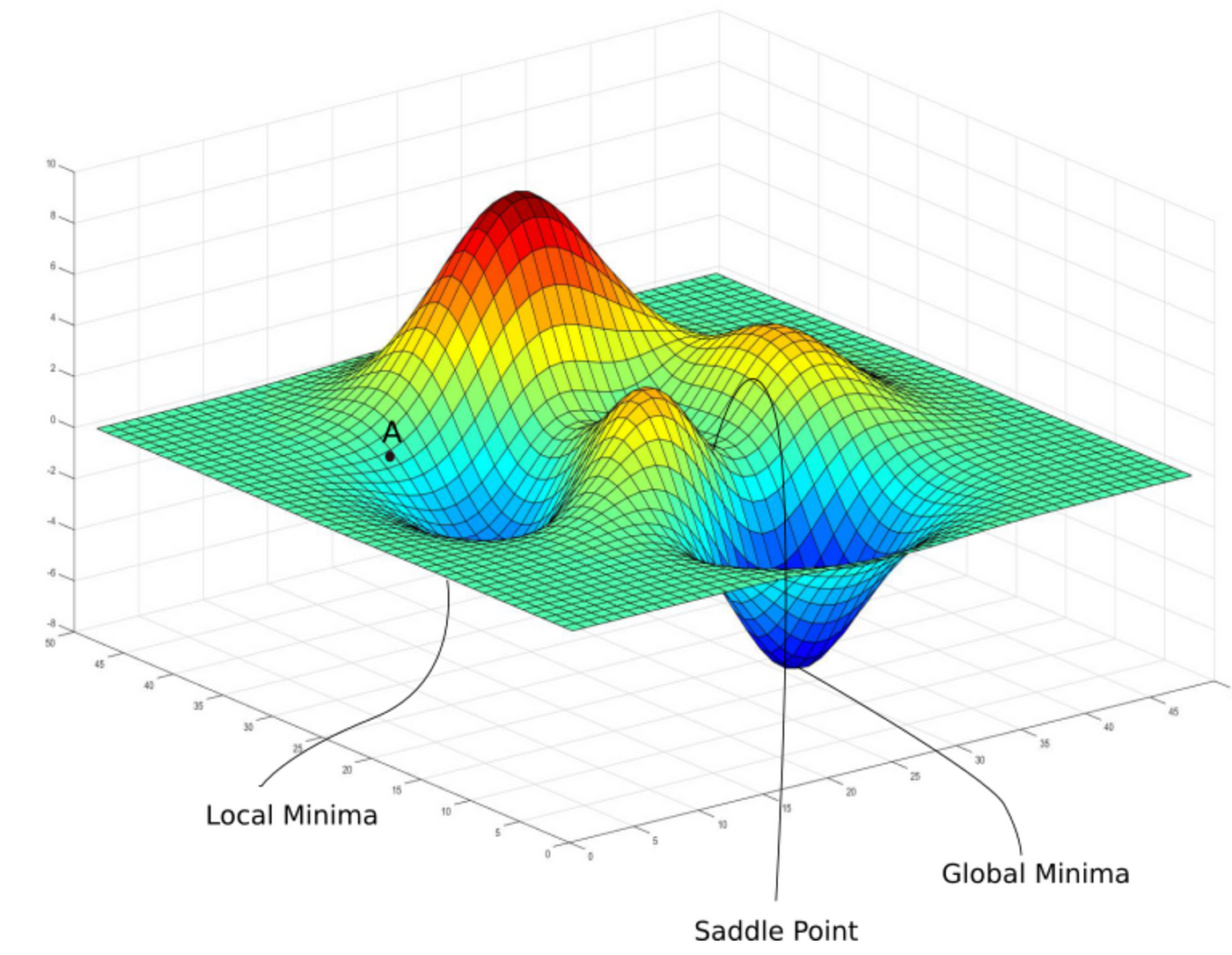

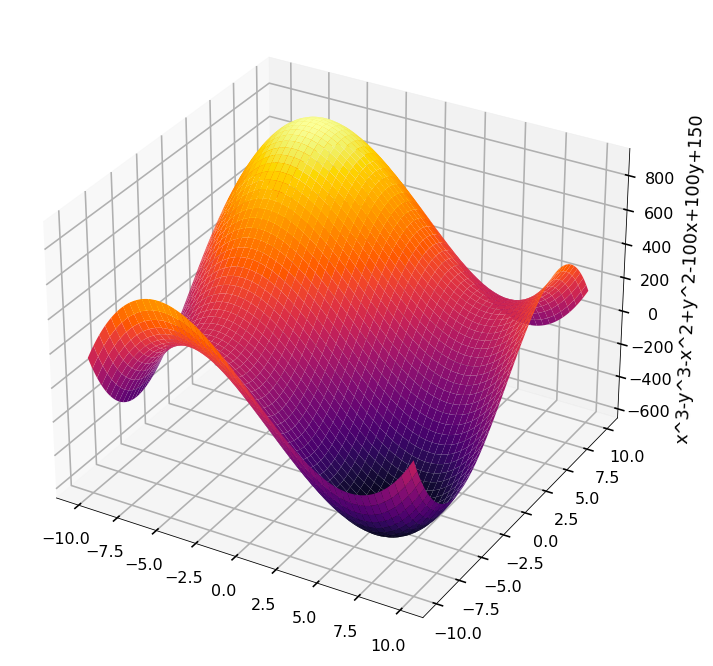

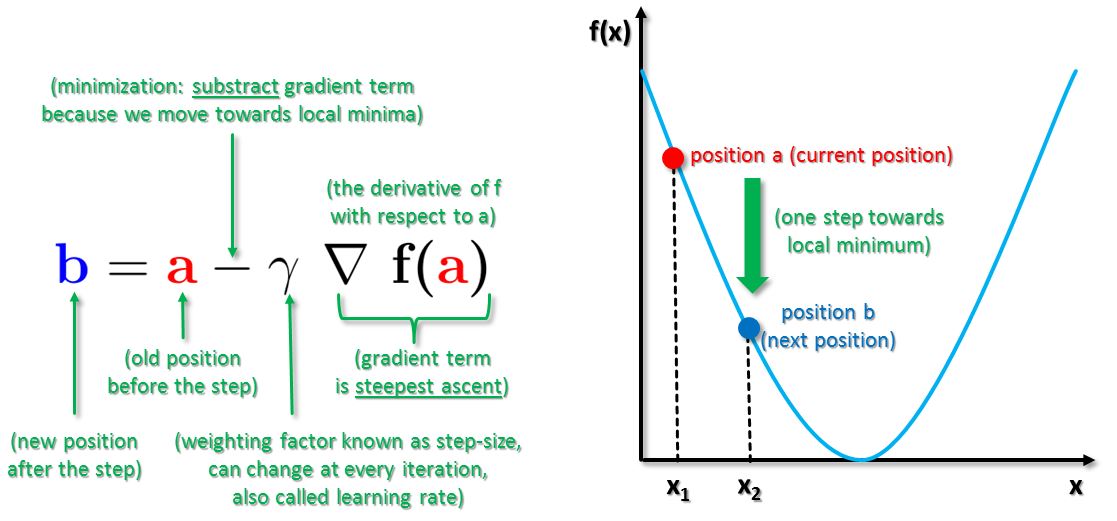

In the gradient descent method of optimization, a hypothesis function, $h_\boldsymbol{\theta}(x)$, is fitted to a data set, $(x^{(i)}, y^{(i)})$ ($i=1,2,\cdots,m$), by minimizing an associated cost function, $J(\boldsymbol{\theta})$, with respect to the parameters $\boldsymbol\theta = (\theta_0, \theta_1, \cdots)$. The cost function describes how closely the hypothesis fits the data for a given choice of $\boldsymbol \theta$.
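As a minimal sketch of this idea, the Python below fits a straight line to a small synthetic data set. The text above does not fix the form of the hypothesis or the cost, so the choices here are assumptions made for illustration: a linear hypothesis $h_\boldsymbol{\theta}(x) = \theta_0 + \theta_1 x$, a mean-squared-error cost $J(\boldsymbol{\theta}) = \frac{1}{2m}\sum_{i=1}^{m}\left(h_\boldsymbol{\theta}(x^{(i)}) - y^{(i)}\right)^2$, a fixed learning rate `alpha`, and the helper names `hypothesis`, `cost` and `gradient_descent`. The `history` list records each step so that the path taken across the cost surface could be plotted.

```python
# Illustrative sketch only: the linear hypothesis, the squared-error cost,
# the learning rate and the data below are assumptions, not from the article.

def hypothesis(theta0, theta1, x):
    """Assumed linear hypothesis h_theta(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x


def cost(theta0, theta1, xs, ys):
    """Assumed cost J(theta) = (1 / 2m) * sum of squared residuals."""
    m = len(xs)
    return sum((hypothesis(theta0, theta1, x) - y) ** 2
               for x, y in zip(xs, ys)) / (2 * m)


def gradient_descent(xs, ys, alpha=0.5, n_steps=2000):
    """Minimize J by repeatedly stepping against its gradient.

    Returns the final parameters and the list of (theta0, theta1, J)
    visited along the way, which is what one would plot to visualize
    the descent.
    """
    m = len(xs)
    theta0, theta1 = 0.0, 0.0
    history = [(theta0, theta1, cost(theta0, theta1, xs, ys))]
    for _ in range(n_steps):
        residuals = [hypothesis(theta0, theta1, x) - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to theta0 and theta1.
        grad0 = sum(residuals) / m
        grad1 = sum(r * x for r, x in zip(residuals, xs)) / m
        # Update both parameters simultaneously.
        theta0 -= alpha * grad0
        theta1 -= alpha * grad1
        history.append((theta0, theta1, cost(theta0, theta1, xs, ys)))
    return theta0, theta1, history


# Synthetic, noise-free data generated from y = 1 + 2x (an assumption).
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [1.0 + 2.0 * x for x in xs]

theta0, theta1, history = gradient_descent(xs, ys)
print(f"theta0 = {theta0:.4f}, theta1 = {theta1:.4f}")
print(f"initial J = {history[0][2]:.4f}, final J = {history[-1][2]:.2e}")
```

Plotting the `(theta0, theta1)` pairs in `history` over a contour map of `cost` is one way to see the descent path. A learning rate that is too large for the data would make the iterates diverge rather than settle, so `alpha` usually needs tuning for each problem.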

How to Visualize Deep Learning Models

How can I imagine / visualize gradient descent with many variables? - Mathematics Stack Exchange

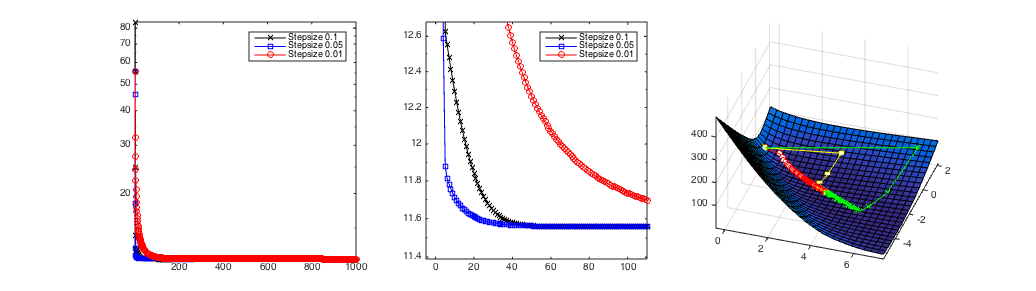

Visualization example of gradient descent algorithm to converge on

Orange Data Mining - Visualizing Gradient Descent

Gradient Descent With AdaGrad From Scratch

Jack McKew's Blog – 3D Gradient Descent in Python

4. A Beginner's Guide to Gradient Descent in Machine Learning, by Yennhi95zz

Visualizing the Gradient Descent Algorithm.

Gradient Descent Visualization - Martin Kondor

Recomendado para você

-

Method of steepest descent - Wikipedia17 maio 2024

-

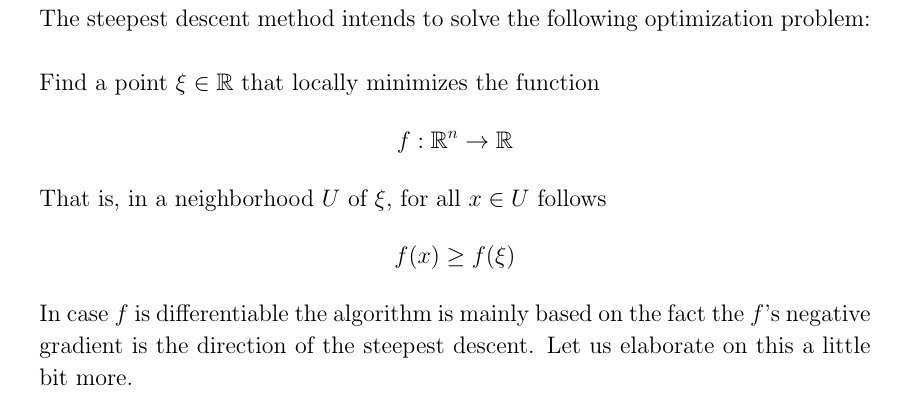

Steepest Descent Method17 maio 2024

Steepest Descent Method17 maio 2024 -

Steepest Descent Method - an overview17 maio 2024

Steepest Descent Method - an overview17 maio 2024 -

matrices - How is the preconditioned conjugate gradient algorithm related to the steepest descent method? - Mathematics Stack Exchange17 maio 2024

matrices - How is the preconditioned conjugate gradient algorithm related to the steepest descent method? - Mathematics Stack Exchange17 maio 2024 -

Lecture 8: Gradient Descent (and Beyond)17 maio 2024

Lecture 8: Gradient Descent (and Beyond)17 maio 2024 -

7: An example of steepest descent optimization steps.17 maio 2024

7: An example of steepest descent optimization steps.17 maio 2024 -

optimization - How to show that the method of steepest descent does not converge in a finite number of steps? - Mathematics Stack Exchange17 maio 2024

optimization - How to show that the method of steepest descent does not converge in a finite number of steps? - Mathematics Stack Exchange17 maio 2024 -

Gradient Descent Big Data Mining & Machine Learning17 maio 2024

Gradient Descent Big Data Mining & Machine Learning17 maio 2024 -

The Steepest Descent Algorithm. With an implementation in Rust., by applied.math.coding17 maio 2024

The Steepest Descent Algorithm. With an implementation in Rust., by applied.math.coding17 maio 2024 -

Solved] . 1. Solve the following using steepest descent algorithm. Start17 maio 2024
